The Blind Spot Exposed—Why Drift Became the Wake-Up Call for Embedded AI

From hypothetical to headline: how the Drift/Salesloft breach reveals a new, hidden enterprise AI risk.

Chris Morosco, VP & Head of Marketing | Aurascape

September 3rd, 2025

🕑 10 minute read

TL;DR

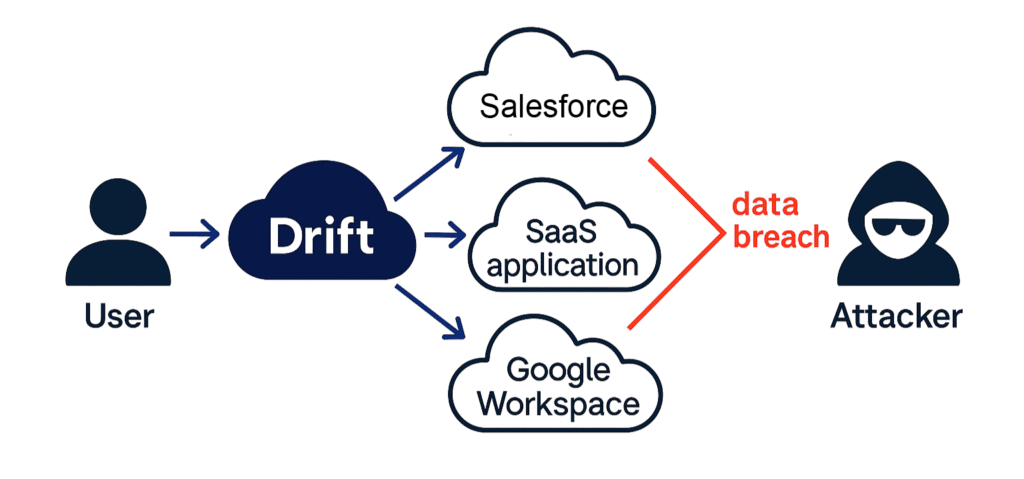

Traditional tools treated embedded AI as ordinary web traffic—and by design, their architectures can’t decode the real-time, custom protocols AI agents use. That left enterprises blind to user-level interactions. The Drift/Salesloft breach proved how dangerous that blind spot can be: attackers stole OAuth tokens and moved through trusted integrations into systems like Salesforce and Google Workspace, exposing data from some of the world’s most sophisticated companies, including Zscaler, Palo Alto Networks, and Cloudflare. Because legacy controls never saw these interactions, organizations have no record of what employees shared—and no way to reconstruct the impact now that the provider has been compromised.

The lesson is clear: enterprises must shift their focus forward. It’s not enough to react to SaaS-to-SaaS breaches on the back end; you need visibility and control at the user level, where employees interact with embedded AI in real time. That means knowing which bots are in play, controlling what data can be exchanged, and keeping a record of every interaction so you can prevent exposure and respond with confidence when the next breach hits.

From Hypothetical to Headlines

On August 1st, we published a blog post describing a hypothetical scenario: an AI chatbot embedded in a widely used SaaS application is compromised; data flows through trusted integrations; and traditional security tools miss it because the AI is hidden inside an app they already trust. That scenario is no longer hypothetical. It is exactly what unfolded in the ongoing Drift/Salesloft incident, where attackers stole OAuth tokens and used them to access downstream systems such as Salesforce (and, in some cases, Google Workspace) through legitimate, pre-approved connections. Investigations by Google’s Threat Intelligence Group, independent reporters, and impacted enterprises continue to expand both the scope of the breach and the timeline of activity.

What Happened in the Drift Breach

Drift’s chatbot runs on vendor websites and often connects—via OAuth—to customers’ back-end systems so the bot can create or update records. In this campaign, the threat actor obtained Drift/Salesloft OAuth tokens and then used those valid credentials to query customers’ Salesforce environments directly, exfiltrating contacts, cases, attachments, and other data. Cloudflare’s public write-up details how the attackers systematically enumerated Salesforce objects, ran targeted SOQL queries, and exfiltrated records ranging from support cases to file attachments. Investigators also observed attempts to delete jobs and artifacts, a clear effort to reduce forensic evidence.

Salesforce was the primary system impacted, because Drift bots were commonly integrated there to log leads, tickets, and customer conversations. But it wasn’t the only one. Google has confirmed that OAuth tokens tied to the Drift Email integration were also compromised, allowing limited access to a small number of Google Workspace accounts. And because Drift supports dozens of integrations with other SaaS platforms—marketing automation, conferencing, analytics, and more—customers are being urged to treat any application connected to Drift via OAuth as potentially exposed.
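The advice to treat every Drift-connected application as potentially exposed can be sketched as a pass over an inventory of OAuth grants. This is a minimal illustration, not a vendor procedure: the `OAuthGrant` record, its fields, and the sample inventory are all hypothetical, and a real inventory would have to be exported from each platform's own admin tooling.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class OAuthGrant:
    """Hypothetical inventory record for one OAuth connection."""
    client_app: str     # app that holds the token, e.g. "Drift"
    target_system: str  # system the token can reach
    scopes: tuple       # scopes granted to the client app
    issued: date


def grants_to_review(grants, compromised_app):
    """Group every grant held by the compromised app by the system it reaches."""
    exposed = {}
    for grant in grants:
        if grant.client_app.lower() == compromised_app.lower():
            exposed.setdefault(grant.target_system, []).append(grant)
    return exposed


# Illustrative data only; names and scopes are made up for the example.
inventory = [
    OAuthGrant("Drift", "Salesforce", ("api", "refresh_token"), date(2024, 3, 1)),
    OAuthGrant("Drift", "Google Workspace", ("mail.read",), date(2024, 6, 12)),
    OAuthGrant("OtherApp", "Salesforce", ("api",), date(2025, 1, 9)),
]

exposed = grants_to_review(inventory, "drift")
for system, held in sorted(exposed.items()):
    print(f"{system}: {len(held)} grant(s) to revoke and rotate")
```

Grouping by target system rather than by token keeps the output aligned with the question responders actually ask first: which downstream systems need credentials revoked and rotated.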

Several high-profile companies have already confirmed impact. Zscaler disclosed that attackers accessed licensing data and customer case content. Palo Alto Networks confirmed that CRM records were exposed. Cloudflare reported attackers targeted support cases, tokens, and secrets, forcing the rotation of API keys. SpyCloud has also acknowledged that sensitive data was involved. The breach did not just put Salesforce CRM data at risk—it exposed valuable enterprise information across the broader SaaS ecosystem connected through Drift.

The Visibility Gap That Makes This Breach Worse

The Drift/Salesloft incident wasn’t just a back-end compromise—it exposed a longstanding visibility gap. Traditional firewalls and secure web gateways never classified interactions with embedded bots like Drift as AI traffic. To them, a page with a chat agent looked like any other website. And the problem runs deeper: their very architectures make it impossible to decode the real-time, custom-protocol communications that AI agents like Drift use. Legacy tools see only generic web sessions—they have no idea whether an employee just typed a prompt, uploaded a file, or exchanged sensitive data. Aurascape was designed differently: it dynamically supports these evolving AI protocols to give you visibility and control over the actual interaction, not just the wrapper around it.

That gap had two consequences. First, if sensitive data was exchanged with a Drift bot, those controls never stopped it from leaving. Second, and more damaging in the current moment, they kept no record of what was exchanged. With Drift’s back end now breached, enterprises are scrambling to answer basic questions: Which employees engaged Drift? What was shared? Did regulated data or customer IP end up in those systems?

And this problem doesn’t end with Drift. The hard truth is that neither SaaS vendors nor your traditional security tools will give you the full picture. The compromised AI agent won’t know what was shared. Not every SaaS vendor will disclose whether it had the agent embedded or lost your data. And traditional security tools never saw the interactions, because they cannot decode the protocols these agents use. Without visibility, you’re left in the dark. Aurascape changes that by recording every user-level interaction and correlating it to the SaaS app involved, so you know exactly where the agent was, who used it, and what was at risk.

The reality is sobering: organizations are entering this breach blind. The true impact is unknown, not because attackers erased every trace, but because legacy tools never saw the conversations at all.

Embedded AI Is the New Enterprise Risk

For years, security programs focused on standalone AI applications—separate sites where users intentionally go to interact with a model. That lens is now outdated. The larger, more systemic challenge is embedded AI woven into the SaaS and websites businesses already rely on: sales chat on a marketing site, “assistants” in service desks, document classifiers in e-signature platforms, and AI helpers inside productivity suites.

Today it was Drift/Salesloft bridging to Salesforce and (for a small number of tenants) Google Workspace. Tomorrow it could be the “smart workflow” in DocuSign, a support assistant in your HR portal, or a data-entry helper inside financial software. The attack surface is no longer “Which AI sites do we allow?” It is “Where is AI already operating—often invisibly—inside apps we trust?”

Why This Matters for Enterprises

The breach highlights a critical blind spot. Sensitive data shared with embedded AI apps like Drift doesn’t just vanish into the ether—it resides in third-party systems you don’t directly control. When those providers are breached, you need to know exactly what your users shared, who they shared it with, and whether regulated or confidential data was involved. Without visibility, organizations are left scrambling in the dark, unable to measure their own exposure.

Enterprises can’t afford to wait for the next embedded-AI provider to fall. The answer isn’t more after-the-fact SaaS triage—it’s moving the control point forward.

How Aurascape Is Different

Aurascape’s strength lies in giving you visibility and control over the user-level interaction between your employees and embedded AI applications wherever they appear. We identify AI interactions even when they occur on ordinary websites or within SaaS UIs, classify that traffic as AI (not just generic web), and analyze the conversation in real time so you can block risky prompts or prevent sensitive files from being exchanged before they leave your control.

That means two things. First, you prevent sensitive data from being exposed in the moment. Second, you retain an authoritative record of who engaged with which embedded agent, what they tried to share, and, critically, which SaaS application those interactions touched. When a provider’s back end is later breached, as happened with Drift/Salesloft, you’re not left guessing. You already know which apps had the compromised agent embedded, who used it, and what data was exchanged.

Your Action Plan: Past, Present, and Future

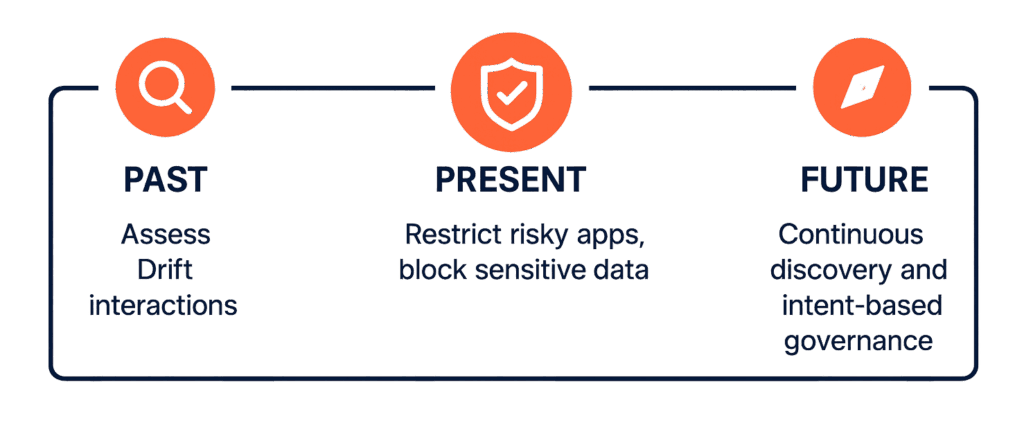

When an embedded-AI provider is breached, enterprises need more than headlines—they need a framework to act. It’s not enough to know that a compromise happened; you must be able to assess your own exposure, contain ongoing risk, and strengthen resilience against the next incident. Aurascape helps you do this across three horizons: looking back to understand impact, acting in the present to protect data, and preparing for the future as embedded AI spreads across the SaaS ecosystem.

Past. If you already run Aurascape, you have visibility into every Drift interaction and can instantly assess impact. You can see which vendors’ Drift bots your employees engaged with, review the full conversation context to determine whether anyone attempted to share protected data, and demonstrate where our inline protections blocked sensitive exchanges. Using Auri, you can query “all Drift conversations in the last 90 days by vendor domain and user” and map those interactions directly to the SaaS applications that were exposed. That clarity is critical. With hundreds of SaaS apps in use across a large enterprise, the question after a breach is simple but urgent: which apps had the compromised agent embedded, and who used it? No AI agent, SaaS vendor, or legacy tool will give you that answer. Aurascape does, delivering the authoritative record you need to fully investigate impact.
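To make the shape of that lookback query concrete, here is a minimal sketch of the same question asked of a generic interaction log. It does not represent Auri or any Aurascape API: the record fields (`agent`, `vendor_domain`, `user`, `timestamp`), the function name, and the sample data are all assumptions made for illustration.

```python
from collections import Counter
from datetime import datetime, timedelta


def conversations_by_vendor_and_user(records, agent, now, window_days=90):
    """Count interactions with one agent per (vendor domain, user) inside the window."""
    cutoff = now - timedelta(days=window_days)
    counts = Counter()
    for record in records:
        if record["agent"] == agent and record["timestamp"] >= cutoff:
            counts[(record["vendor_domain"], record["user"])] += 1
    return counts


# Hypothetical log entries; a real log would come from your inspection layer.
log = [
    {"agent": "drift", "vendor_domain": "vendor-a.example", "user": "alice",
     "timestamp": datetime(2025, 8, 20)},
    {"agent": "drift", "vendor_domain": "vendor-a.example", "user": "alice",
     "timestamp": datetime(2025, 8, 25)},
    {"agent": "drift", "vendor_domain": "vendor-b.example", "user": "bob",
     "timestamp": datetime(2025, 5, 1)},   # falls outside the 90-day window
    {"agent": "other", "vendor_domain": "vendor-a.example", "user": "carol",
     "timestamp": datetime(2025, 8, 1)},   # different agent, ignored
]

summary = conversations_by_vendor_and_user(log, "drift", now=datetime(2025, 9, 3))
for (domain, user), total in sorted(summary.items()):
    print(domain, user, total)
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the window reproducible when the same investigation is rerun later.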

Present. Start by restricting high-risk apps, preventing sensitive data exchange, and enabling conversation logging. First, control which embedded-AI apps your employees can access. Treat high-risk tools like Drift, Intercom, and Freshchat as restricted until you are confident in your protections. Second, ensure that sensitive data cannot be exchanged with any embedded AI. This means applying real-time inspection at the point of use to block customer records, financial data, source code, or credentials before they leave your environment. Third, establish conversation logging and auditing. Having a complete record of who engaged with which bot, what was asked, and what was exchanged is essential for compliance and for conducting post-incident investigations.
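The three steps above (restrict, inspect, log) can be sketched as a single decision function. This is an illustration under stated assumptions, not Aurascape's implementation: the restricted-agent list mirrors the examples in the text, the regular expressions are deliberately simple stand-ins for real data classifiers, and the in-memory `audit_log` is a placeholder for durable storage.

```python
import re
from datetime import datetime, timezone

# Step 1: agents treated as restricted (taken from the examples in the text).
RESTRICTED_AGENTS = {"drift", "intercom", "freshchat"}

# Step 2: illustrative patterns only; production classifiers are far richer.
SENSITIVE_PATTERNS = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

# Step 3: placeholder audit trail (hypothetical; use durable storage in practice).
audit_log = []


def evaluate_message(user, agent, text):
    """Return "allow" or "block" for one outbound message and record the decision."""
    findings = sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)
    )
    if agent.lower() in RESTRICTED_AGENTS:
        decision, reason = "block", "restricted agent"
    elif findings:
        decision, reason = "block", "sensitive data detected"
    else:
        decision, reason = "allow", "no policy match"
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "agent": agent,
        "decision": decision,
        "reason": reason,
        "findings": findings,  # labels only, so the log does not copy the secret
    })
    return decision


print(evaluate_message("alice", "Drift", "What are your pricing tiers?"))
print(evaluate_message("bob", "HelpBot", "my key is AKIAABCDEFGHIJKLMNOP"))
print(evaluate_message("carol", "HelpBot", "What are your pricing tiers?"))
```

Every message is logged whether it is allowed or blocked, because the record of allowed exchanges is what supports a later impact assessment. This sketch stores finding labels rather than raw text; how much conversation content to retain is a policy decision for each organization.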

Future. Recognize this is only the beginning. Embedded AI is spreading rapidly across SaaS and websites—well beyond chat widgets. To build resilience, organizations need continuous discovery of where these AI agents live, policies that govern use by intent rather than coarse app blocking, and the ability to enforce protections at the conversation level. Aurascape was designed for this reality: we make AI usage visible, enforce guardrails to keep sensitive data safe, and give you the logs you need to prove compliance and quickly assess impact when providers are breached.

What To Do Now

Assume you already have embedded AI in your environment—even where no one labeled it “AI.” Most importantly, shift your control point forward—from trying to secure opaque SaaS-to-SaaS back ends you cannot see, to governing the user interactions that feed them. That is how you prevent exposure, and it is how you retain the evidence you will need when (not if) the next embedded-AI provider suffers a breach. If you want help standing this up quickly, Aurascape is ready to partner with you.

See it first-hand: Schedule a walkthrough today to discover how Aurascape makes the hidden visible, governs embedded AI in real time, and closes the blind spot before the next breach.
